This is a continuation of my personal responses to and musings upon the report Engage to Excel from the President’s Council of Advisors on Science and Technology (PCAST), this month focusing on the first recommendation:

- Catalyze widespread adoption of empirically validated teaching practices.

As the PCAST report points out, there are many techniques for improving classroom interaction that are known to improve student performance. Table 2 on page 17 of the report illustrates several of these and references the research literature. Their list includes small group discussion, one-minute papers, clickers, and problem-based learning.

The Physics Education Research (PER) community, through the American Association of Physics Teachers, has done a nice job of organizing a website of 51 evidence-based teaching methods that have been demonstrated to be effective: PERusersguide.org. The site is organized to make it useful for the instructor: a brief description of each method and six searchable cross-listings that describe

- Level: the courses for which it is appropriate, usually introductory physics,

- Setting: whether designed for large lecture, small classes, labs, or recitation sections,

- Coverage: whether it requires studying fewer topics at greater depth,

- Effort: low, medium, or high,

- Resources: what is needed, ranging from computer access to printed materials that must be purchased to classrooms with tables,

- Skills: what students are expected to acquire, usually including conceptual understanding, but also possibly problem-solving skills and laboratory skills.

In addition, each of the methods includes a list of the types of validation that have been conducted: what aspects of student learning were studied, what skills the method has been demonstrated to improve, and the nature of the research methods.

I wish we had a comparable site for mathematics. Inevitably, a site developed by MAA and the Research in Undergraduate Mathematics Education (RUME) community would need to be both more comprehensive and more complex. Introductory physics is a relatively straightforward course with clear goals, a restricted clientele, and only two flavors: calculus-based or non-calculus-based. In addition, most of the content is new to most of its students.

Introductory college-level mathematics is far more diverse and serves a broad set of disciplines that often place quite specific and disparate demands on these courses. On top of this, we in the mathematics community are plagued by the fact that almost nothing commonly taught in the first year, even Calculus I and II, is completely fresh to these incoming students. At the same time, too many of these students enter without the conceptual knowledge of mathematics and the skill in using it that they need to thrive in this first college course. For those of us who teach college-level mathematics, pressures for coverage are greater and gaps in student preparation are more acute and problematic than they are for introductory physics.

Compounding the difficulty of conducting research on teaching methods in undergraduate mathematics is the fact that the RUME community is only one small part of Mathematics Education Research, which studies the learning of all mathematical knowledge, beginning with early childhood recognition of small counting numbers as cardinalities. RUME competes for dollars and publication space against well-established research programs whose methods of validation have been honed over decades but are often inappropriate for understanding the complexities inherent in the learning of higher mathematics.

Nevertheless, the mathematical community does have research evidence for instructional strategies that work. There is a long history of studies of Emerging Scholars Programs, active learning strategies, and computer-aided instruction (see my column "Lessons for Effective Teaching," November 2008). Sandra Laursen and her group at CU-Boulder have studied Inquiry-Based Learning and documented its benefits (see my column "The Best Way to Learn," August 2011).

Unfortunately, the experience of the physicists demonstrates that the existence of research-based instructional strategies, together with documentation of their effectiveness, is not sufficient to guarantee their widespread adoption. Why not?

Again, the PER community is ahead of the RUME community in this regard. At the recent interdisciplinary conference Transforming Research in Undergraduate Science Education (TRUSE), held at the University of Saint Thomas in Saint Paul, MN, June 3–7, Melissa Dancy of CU-Boulder spoke on "Educational Transformation in STEM: Why has it been limited and how can it be accelerated?"

Much of the work described in Dancy’s talk can be found in the preprint by Henderson, Dancy, and Niewiadomska-Bugaj [1]. This paper will be the topic of my August column. Their surveys of physics faculty demonstrate that the greatest problem is not in making faculty aware of what has been done, or even in getting faculty to try different approaches to teaching. The greatest problem is in getting faculty to stick with these strategies.

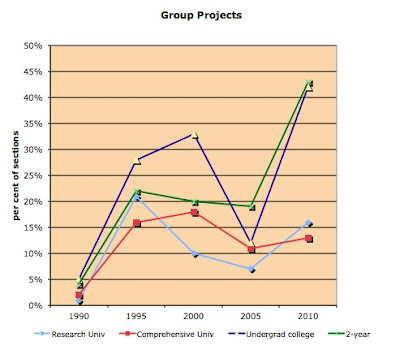

What Henderson et al. have to say resonates with my own experience. As I reported in "Reform Fatigue," June 2007, the use of calculators, computers, writing assignments, and group projects in Calculus rose during the 1990s but dropped off sharply between 2000 and 2005. The 2010 survey conducted under MAA’s study of Characteristics of Successful Programs in College Calculus showed a continuing but modest decline in the use of graphing calculators and computers. Yet there was a bright spot: the use of group projects has rebounded (see Graph 1). Much depends on the quality of the group projects and how they are used, but the data suggest that the mathematics community is not totally immune to empirically validated teaching practices.

[1] Henderson, C., M. Dancy, and M. Niewiadomska-Bugaj. 2012. The Use of Research-Based Instructional Strategies in Introductory Physics: Where Do Faculty Leave the Innovation-Decision Process? Submitted.

"Unfortunately, the experience of the physicists demonstrates that the existence of research based instructional strategies together with documentation of their effectiveness is not sufficient to guarantee their widespread adoption. Why not?"

Because the curriculums that result from this alleged "research" appear very compromised when compared to the traditional curriculums that aspiring students are accustomed to. While they may offer a better hospice for the terminal student, they lack essential and key ingredients for the aspiring student, like rigor, detail, development and challenge.

As Misbah mentioned, the majority of students, even in college, are uninterested in these subjects. The education bubble has flooded a system of higher education that was originally designed for a very different type of student: an academically interested one. The performance of this flood as a whole, in these subjects, has been abysmal. I know of no other way to describe college calculus classes with passing scores on a final exam that range from the low 30s to 100. Let's be honest again.

This "research" is born of this flood and is infected by that disinterest. The resultant curriculums reek of it. You asked why and I am telling you why. You might not agree, but that is the answer to your question "Why not?": because the curriculums look very wrong to us.

Which leads me to the claim that these curriculums are backed by evidence. Suppose you hand me a dollar bill, I cover it with a cloth, and when I remove the cloth there are two dollar bills. You will know without a moment's hesitation that I did a trick. Even though there are now two physical dollar bills in front of you, you will not be fooled, because it is simply impossible to make one dollar bill turn into two! And you will not accept any explanation from me except the truth: that I tricked you. So when you show me a curriculum that lacks the basic ingredients of rigor, detail and development, and you claim it is more successful than a traditional curriculum with those ingredients, I will not believe the impossible. And when you look at the purported evidence, you will find the trick. There is always a "sleight of hand" in the argument. I've spent the last several years as a James Randi of sorts, looking for those tricks, and they are pretty obvious to me now. Usually it is just the reliable ole switch-a-roo, replacing the accepted notion of "success" with something entirely different, but done quickly so as not to be noticed.

And finally, these alternative curriculums are doomed for a very fundamental reason: they don't produce advocates. Those ingredients in a traditional curriculum, like rigor, detail and development, are not just essential for aspiring students; they are critical for aspiring teachers. Not just professional teachers, but anyone who can carry the art of mathematics or physics or anything else forward. The alternative "research-based" curriculums are bent toward uninterested students, and because they lack those ingredients, those students will never become advocates. Five years later, 10 years later, 30 years later, you will never see those students near a blog like this. Just the sellers.

Bob Hansen

I have responded to Robert Hansen's comment in the following discussion list post:

Hake, R.R. 2012. "What Mathematicians Might Learn From Physicists: Response to Hansen," online on the OPEN AERA-L archives at http://bit.ly/NxE6kB. Post of 18 Aug 2012 14:55:51-0700 to AERA-L and Net-Gold. The abstract and link to the complete post are being transmitted to several discussion lists and are also on my blog "Hake'sEdStuff" at http://bit.ly/MEwmvH with a provision for comments.